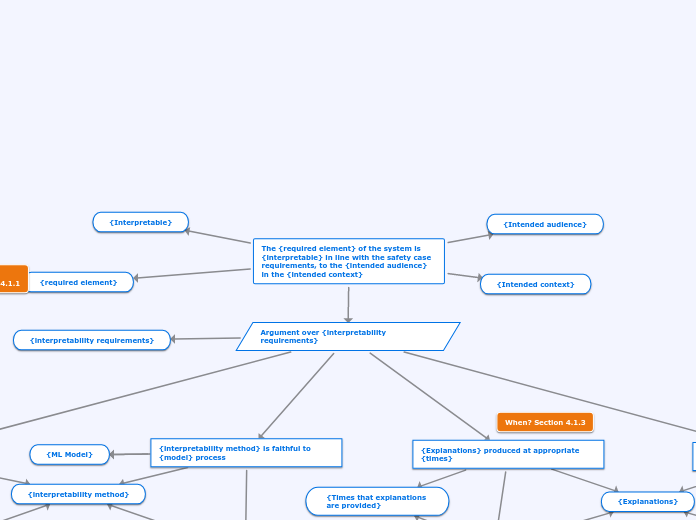

The {required element} of the system is {interpretable} in line with the safety case requirements, to the {intended audience} in the {intended context}

{Interpretable}

{required element}

{Intended audience}

{Intended context}

Argument over {interpretability requirements}

{interpretability requirements}

The implemented {interpretability method} is appropriate for the {requirements}, i.e. the correct thing is being explained

Argument over {interpretability methods}

{Explainability method Evidence}

{interpretability method} is faithful to {model} process

Argument over faithfulness of {interpretability method}

{Explainability method Evidence}

{ML Model}

{Explanations} produced at appropriate {times}

Argument over appropriateness of {explanations} {time}

{Time Evidence}

{Times that explanations are provided}

{Explanations} are appropriate for {audience}

Argument over appropriateness of {explanations} to {audience}

{Audience Evidence}

{Audience}