Google GCP ACE

Cloud Computing

Cloud Computing - Google Cloud

GCP Solutions Gallery

Docs

Best Practices

Best Practices for enterprise organisations

Organisational setup

Define your resource hierarchy

Create an organisation node

Specify your project structure

Automate project creation

Use Cloud Deployment Manager, Ansible, Terraform, or Puppet

Automation allows you to support best practices such as consistent naming conventions and labeling of resources

Identity and Access management

Manage your Google identities

We recommend using fully managed Google accounts tied to your corporate domain name through Cloud Identity

Cloud Identity is a stand-alone Identity-as-a-Service (IDaaS) solution

Federate your identity provider

Synchronize your user directory with Cloud Identity

Migrate unmanaged accounts

Control access to resources

Authorize your developers and IT staff to consume GCP resources

Rather than directly assigning permissions, you assign roles. Roles are collections of permissions

Apply the security principle of least privilege

Cloud IAM enables you to control access by defining who (identity) has what access (role) for which resource.

Delegate responsibility with groups and service accounts

We recommend collecting users with the same responsibilities into groups and assigning Cloud IAM roles to the groups rather than to individual users.
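
As a rough illustration of granting a role to a group rather than to individuals, the sketch below edits a policy held as a plain dictionary in the `bindings` / `role` / `members` shape used by Cloud IAM policies. The helper name `grant_role`, the group address, and the user address are hypothetical; nothing here calls a Google API.

```python
def grant_role(policy, role, member):
    """Return a copy of `policy` with `member` added to the binding for `role`."""
    # Copy the bindings so the caller's policy object is left untouched.
    bindings = [dict(b, members=list(b["members"])) for b in policy.get("bindings", [])]
    for binding in bindings:
        if binding["role"] == role:
            if member not in binding["members"]:
                binding["members"].append(member)
            break
    else:
        # No binding for this role yet, so create one.
        bindings.append({"role": role, "members": [member]})
    return {**policy, "bindings": bindings}


# Hypothetical starting policy with one individual user.
policy = {"bindings": [{"role": "roles/viewer", "members": ["user:alice@example.com"]}]}

# Grant the role once to the group instead of to each member of the team.
updated = grant_role(policy, "roles/compute.instanceAdmin.v1", "group:ops-team@example.com")
```

Membership changes then happen in the group, and the policy itself does not need to be edited when people join or leave the team.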

Define an organisation policy

Use the Organization Policy Service to get centralized and programmatic control over your organization's cloud resources. Cloud IAM focuses on who, providing the ability to authorize users and groups to take action on specific resources based on permissions. An organization policy focuses on what, providing the ability to set restrictions on specific resources to determine how they can be configured and used.

Networking & Security

Logging, monitoring, and operations

Cloud Architecture

Plan your migration

Favour managed services

Use GCP managed services to help reduce operational burden and total cost of ownership (TCO).

Design for high availability

To help maintain uptime for mission-critical apps, design resilient apps that gracefully handle failures or unexpected changes in load.

Plan your disaster recovery strategy

Billing and management

gcloud

Docs- GCP ACE Study

Useful examples to script & Prepare Data

Billing

Network

Metadata

Architecture

GCP High Level Architecture

Regions & Zones

Network

VPC Network

Google Networks (cloud on air)

VPC is a global resource

VPC networks do not have any IP address ranges associated with them; IP ranges are defined for the subnets

Traffic is controlled by firewall rules

Resources within a VPC communicate using internal IPv4 (unicast)

Resources with an internal IPv4 address can communicate with APIs and services

VPC to VPC connections use VPC Network Peering

Connect to hybrid envs. using Cloud VPN or Cloud Interconnect

Create an IPv6 address for a global load balancer

Subnets

Subnets are regional resources

A subnet defines a range of IP addresses

Auto-mode (default) & Custom available

Considerations for auto-mode vs Custom Networks

Auto to custom conversion is one way

Custom mode

Subnets must be created

IP address ranges must be specified

Regions must be specified

Working with Subnets

Private/ Reserved IP address Ranges

Private

10.0.0.0 - 10.255.255.255 (private)

172.16.0.0 - 172.31.255.255 (private)

192.168.0.0 - 192.168.255.255 (private)

Reserved

0.0.0.0 - 0.255.255.255 (Devices can't communicate in this range)

127.0.0.0-127.255.255.255 (loopback testing)

169.254.0.0 - 169.254.255.255 (APIPA only)
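
The ranges above can be checked with Python's standard `ipaddress` module. This is a minimal sketch: the ranges are written in their CIDR form (for example 10.0.0.0 - 10.255.255.255 is 10.0.0.0/8), and the function name `classify` and the labels it returns are illustrative choices.

```python
import ipaddress

# The three private blocks listed above, in CIDR form.
PRIVATE = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

# The reserved blocks listed above, with a short label for each.
RESERVED = {
    "0.0.0.0/8": "this network",
    "127.0.0.0/8": "loopback",
    "169.254.0.0/16": "link-local (APIPA)",
}


def classify(address):
    """Return 'private', 'reserved: <label>' or 'other' for an IPv4 address string."""
    ip = ipaddress.ip_address(address)
    if any(ip in net for net in PRIVATE):
        return "private"
    for cidr, label in RESERVED.items():
        if ip in ipaddress.ip_network(cidr):
            return "reserved: " + label
    return "other"
```

For instance, `classify("172.31.255.255")` falls in the private block, while `classify("172.32.0.1")` sits just outside it.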

VPC Network Peering

VPC Network Peering enables you to peer VPC networks so that workloads in different VPC networks can communicate in private RFC 1918 space. Traffic stays within Google's network and doesn't traverse the public internet.

Useful for:

Advantages:

Key Properties

Restrictions:

A subnet CIDR range in one peered VPC network cannot overlap with a static route in another peered network. This rule covers both subnet routes and static routes. GCP checks for overlap in the following circumstances and generates an error when an overlap occurs.

When you peer VPC networks for the first time

When you create a static route in a peered VPC network

When you create a new subnet in a peered VPC network

A dynamic route can overlap with a subnet route in a peer network. For dynamic routes, the destination ranges that overlap with a subnet route from the peer network are silently dropped. GCP uses the subnet route.

Only VPC networks are supported for VPC Network Peering. Peering is NOT supported for legacy networks.

You can't disable the subnet route exchange or select which subnet routes are exchanged. After peering is established, all resources within subnet IP addresses are accessible across directly peered networks. VPC Network Peering doesn't provide granular route controls to filter out which subnet CIDR ranges are reachable across peered networks. You must use firewall rules to filter traffic if that's required. The following types of endpoints and resources are reachable across any directly peered networks:

Virtual machine (VM) internal IPs in all subnets

Internal load balanced IPs in all subnets

You can include static and dynamic routes by enabling exporting and importing custom routes on your peering connections. Both sides of the connection must be configured to import or export custom routes. For more information, see Importing and exporting custom routes.

Only directly peered networks can communicate. Transitive peering is not supported. In other words, if VPC network N1 is peered with N2 and N3, but N2 and N3 are not directly connected, VPC network N2 cannot communicate with VPC network N3 over VPC Network Peering.
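
The N1/N2/N3 example can be modelled in a few lines. This is only a toy model of the rule (direct peerings count, transitive hops do not); the function name `can_communicate` is an assumption for illustration.

```python
def can_communicate(peerings, a, b):
    """True only if networks a and b are directly peered (no transitive hops)."""
    # Peering is symmetric, so each pair is stored without ordering.
    direct = {frozenset(pair) for pair in peerings}
    return frozenset((a, b)) in direct


# N1 is peered with N2 and with N3; N2 and N3 are not peered with each other.
peerings = [("N1", "N2"), ("N1", "N3")]
```

With these peerings, N2 can reach N1 and N3 can reach N1, but `can_communicate(peerings, "N2", "N3")` is false because there is no direct peering between them.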

You cannot use a tag or service account from one peered network in the other peered network.

Compute Engine internal DNS names created in a network are not accessible to peered networks. Use the IP address to reach the VM instances in a peered network.

By default, VPC Network Peering with GKE is supported when used with IP aliases. If you don't use IP aliases, you can export custom routes so that GKE containers are reachable from peered networks.

**Overlapping subnets

Peering will not be established if there are overlapping subnets at time of peering

When a VPC subnet is created or a subnet IP range is expanded, GCP performs a check to make sure the new subnet range does not overlap with IP ranges of subnets in the same VPC network or in directly peered VPC networks. If it does, the creation or expansion action fails.
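
A similar overlap check can be tried locally before creating or expanding a subnet. The sketch below uses `ipaddress.ip_network(...).overlaps(...)` from the standard library; the function name `find_overlaps` and the example ranges are hypothetical, and this is not the check GCP itself runs.

```python
import ipaddress


def find_overlaps(new_range, existing_ranges):
    """Return the existing CIDR ranges that overlap with the proposed one."""
    new_net = ipaddress.ip_network(new_range)
    return [r for r in existing_ranges if new_net.overlaps(ipaddress.ip_network(r))]


# Hypothetical subnet ranges already in use in this VPC and its direct peers.
existing = ["10.0.0.0/20", "10.128.0.0/20", "192.168.1.0/24"]
```

An empty result means the proposed range is free of conflicts with the listed ranges; any returned entry names a range that would make the creation or expansion fail.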

Alias IP

Google Cloud Platform (GCP) alias IP ranges let you assign ranges of internal IP addresses as aliases to a virtual machine's (VM) network interfaces. This is useful if you have multiple services running on a VM and you want to assign each service a different IP address.

CIDR

CIDR Notation (YouTube)

Links

Protect your connectivity (YouTube)

Cloud CDN

CDN Interconnect

Cloud Interconnect

Cloud Interconnect with VPN

Cloud Router for VPN

Cloud Interconnect with Direct Connect

Cloud Router

Configuring Special Access

GKE- YouTube

Load Balancing

Choosing a load balancer

Standard Network service tier (Regional)

Premium network service tier (Global)

HTTPS, HTTP, or TCP/SSL

Single anycast IP address

Instances globally distributed

Health checks

IP address and cookie-based affinity

IPv6 and IPv4 client termination

Connection draining

Autoscaling

Monitoring and logging

Load balancing for cloud storage

Cross-region overflow and failover

Overview (link)

Google Next

CloudNext19DeepDive

Best Practices

Compute resources

Compute Engine

Launch Check List

Links

Sole Tenant nodes

Change Machine types

**Machine types (pre-defined)

**Custom Machine types

Predefined machine types are named by their CPU counts, which are usually powers of 2 (for example, n1-standard-8 has 8 vCPUs)

Custom machine types let you tweak the predefined types, but you cannot add more RAM per CPU than you get with the 'highmem' machine types unless you use 'extended memory'

Custom Extensions

Custom-CPU

custom-memory

The Max CPUs available may vary depending on the zone and machine types therein

Only machine types with 1 CPU or an even number of CPUs can be created

Memory must be between 0.9 GB (min) and 6.5 GB (max) per CPU

The total memory must be a multiple of 256 MB

A Valid machine type = 32 CPUs with 29GB RAM
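
The three rules above can be checked mechanically. The sketch below encodes only the limits stated in these notes (1 or an even number of CPUs, 0.9 GB to 6.5 GB per CPU, total memory a multiple of 256 MB) and ignores zone limits and extended memory; the function name `validate_custom_machine_type` is hypothetical.

```python
def validate_custom_machine_type(vcpus, memory_mb):
    """Return a list of rule violations; an empty list means the shape looks valid."""
    problems = []
    if vcpus < 1 or (vcpus != 1 and vcpus % 2 != 0):
        problems.append("vCPU count must be 1 or an even number")
    if memory_mb % 256 != 0:
        problems.append("total memory must be a multiple of 256 MB")
    if vcpus >= 1:
        # Compare in tenths of a MB so that 0.9 GB and 6.5 GB stay exact integers.
        if memory_mb * 10 < 9 * 1024 * vcpus:
            problems.append("memory is below 0.9 GB per vCPU")
        if memory_mb * 10 > 65 * 1024 * vcpus:
            problems.append("memory is above 6.5 GB per vCPU (without extended memory)")
    return problems
```

The example from the notes, 32 CPUs with 29 GB, passes: 29 GB is 29696 MB, which is a multiple of 256 and works out to about 0.906 GB per CPU, just above the minimum.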

Static IP

Opt 1: Reserve a new static IP & assign to a new VM

Opt 2: Promote an existing address

Restrictions:

You cannot 'change' the internal IP address of an existing resource

Reserve up to 200 static internal IP addresses per region by default

Only one resource at a time can use a static internal IP address

Use 'gcloud compute addresses list' to list the static IP addresses available to the project

Autoscaling Instances

Autoscaling is a feature of managed instance groups. A managed instance group is a pool of homogeneous instances, created from a common instance template. An autoscaler adds or deletes instances from a managed instance group. Although Compute Engine has both managed and unmanaged instance groups, only managed instance groups can be used with autoscaler.

Autoscaling policy and target utilization

To create an autoscaler, you must specify the autoscaling policy and a target utilization level. You can choose to scale using the following policies:

Average CPU utilization

HTTP load balancing serving capacity, which can be based on either utilization or requests per second.

Stackdriver Monitoring metrics

Managed instance groups

Suitable for stateless workloads (e.g. frontends, batch workloads, high throughput)

Each instance in a MIG is created from an instance template

Benefits

High Availability

Autohealing - in 'running state'

Regional or Zonal Groups

A zonal managed instance group deploys instances to a single zone

A regional managed instance group deploys instances to multiple zones across the same region.

Load balancing

GCP load balancing can use instance groups to serve traffic. Depending on the type of load balancer you choose, you can add instance groups to a target pool or to a backend service.

Scalability

Managed instance groups support autoscaling that dynamically adds or removes instances from a managed instance group in response to increases or decreases in load. You turn on autoscaling and configure an autoscaling policy to specify how you want the group to scale. Autoscaling policies include scaling based on CPU utilization, load balancing capacity, Stackdriver monitoring metrics, or, for zonal MIGs, by a queue-based workload like Google Cloud Pub/Sub.

Automated updates

Safely deploy new versions of software to instances in a managed instance group. The rollout of an update happens automatically based on your specifications: you can control the speed and scope of the update rollout in order to minimize disruptions to your application. You can optionally perform partial rollouts which allows for canary testing

Groups of preemptible instances:

For workloads where minimal costs are more important than speed of execution, you can reduce the cost of your workload by using preemptible VM instances in your instance group. Preemptible instances last up to 24 hours, and are preempted gracefully - your application will have 30 seconds to exit correctly. Preemptible instances can be deleted at any time, but autohealing will bring the instances back when preemptible capacity becomes available again.

Containers

You can simplify application deployment by deploying containers to instances in managed instance groups. When you specify a container image in an instance template and then use that template to create a managed instance group, each instance will be created with a container-optimized OS that includes Docker, and your container will start automatically on each instance in the group. See Deploying containers on VMs and managed instance groups.

Network

By default, instances in the group will be placed in the default network and randomly assigned IP addresses from the regional range. Alternatively, you can restrict the IP range of the group by creating a custom mode VPC network and subnet that uses a smaller IP range, then specifying this subnet in the instance template.

Demo of MIG capabilities (YouTube)

Unmanaged Instance Groups

Unmanaged instance groups can contain heterogeneous instances that you can arbitrarily add and remove from the group. Unmanaged instance groups do not offer autoscaling, auto-healing, rolling update support, or the use of instance templates and are not a good fit for deploying highly available and scalable workloads. Use unmanaged instance groups if you need to apply load balancing to groups of heterogeneous instances or if you need to manage the instances yourself.

Autoscaling: HTTP(S) LB

An HTTP(S) load balancer spreads load across backend services, which distribute traffic among instance groups.

You can define the load balancing serving capacity of the instance groups associated with the backend as maximum CPU utilization, maximum requests per second (RPS) per instance, or maximum requests per second of the group

What is a back end service?

A backend service is a resource with fields containing configuration values for the following GCP load balancing services:

HTTP(S) Load Balancing

SSL Proxy Load Balancing

TCP Proxy Load Balancing

Internal Load Balancing

A backend service directs traffic to backends, which are instance groups or network endpoint groups

The backend service performs various functions, such as:

Directing traffic according to a balancing mode

Monitoring backend health according to a health check

Maintaining session affinity

Architecture

Autoscaling: Network LB

A network load balancer distributes load using lower-level protocols such as TCP and UDP. Network load balancing lets you distribute traffic that is not based on HTTP(S), such as SMTP.

You can autoscale a managed instance group that is part of a network load balancer target pool using CPU utilization or custom metrics. For more information, see Scaling Based on CPU utilization or Scaling Based on Stackdriver Monitoring Metrics.

Caution!:

Autoscaler cannot perform autoscaling when there is a backup target pool attached to the primary target pool because when the autoscaler scales down, some instances will start failing health checks from the load balancer. If the number of failed health checks reaches the failover ratio, the load balancer will start redirecting traffic to the backup target pool, causing the utilization of the managed instance group in the primary target pool to drop to zero. As a result, the autoscaler won't be able to accurately scale the managed instance group in the primary target pool. For this reason, we recommend that you do not assign a backup target pool when using autoscaler.

Autoscaling: Stackdriver

Scale using per-instance metrics where the selected metric provides data for each instance in the managed instance group indicating resource utilization.

Scale using per-group metrics (Beta) where the group scales based on a metric that provides a value related to the whole managed instance group.

App Engine

Choosing the right environment

Standard

Application instances run in a sandbox, using the runtime environment of a supported language

Standard optimal for (applications)

Written in specific versions of the supported programming languages

Python 2.7, Python 3.7

Java 8

Node.js 8, and Node.js 10

PHP 5.5, and PHP 7.2

Go 1.9, Go 1.11, and Go 1.12 (beta)

With Sudden and extreme spikes of traffic which require immediate scaling

Intended to run for free or at very low cost

Flexible

Application instances run within Docker containers on Compute Engine virtual machines

Flexible optimal for

Applications that receive consistent traffic, experience regular traffic fluctuations, or meet the parameters for scaling up and down gradually

Applications where:

Source code is written in a version of any of the supported programming languages:

Python, Java, Node.js, Go, Ruby, PHP, or .NET

It runs in a Docker container that includes a custom runtime or source code written in other programming languages.

It uses or depends on frameworks that include native code.

It accesses the resources or services of your Google Cloud Platform project that reside in the Compute Engine network.

Review the App Engine comparisons!

Links

Cloud Functions

Kubernetes Engine

kubectl Commands

commands

kubectl get [nodes] [pods]

kubectl describe [nodes] [pods] [deploy]

kubectl apply --filename=[filename].yml --record=true

kubectl rollout history deploy [deploymentname]

kubectl rollout undo deploy [deploymentname]

kubectl get deploy --namespace [thenamespace]

kubectl get hpa --namespace [thenamespace]

kubectl apply -f ./ping-deploy.yml

kubectl apply -f ./simple-web.yml

kubectl get pv

kubectl get pvc

kubectl get sc

kubectl get sc [StorageClassName]

kubectl get rs -o wide (rs = ReplicaSets)

Networking

Service network

kube-proxy

Pod Network

Node Network

Storage

Back-end storage services (S3, GCP bucket, enterprise array) connect to:

Container Storage Interface (CSI), via a plugin/connector that the PV subsystem consumes. CSI is open source; the storage providers write their own plugins for the PV subsystem.

PV Subsystem

Persistent Volume (PV): a storage resource, e.g. a 20 GB SSD volume

PersistentVolumeClaim (PVC) ['Token' to use a PV]

StorageClass (SC) [Makes it dynamic]

Dynamic Provisioning w/ Storage Classes

Provisioner is the k8s name for the CSI plugin, which allows the admin to connect to multiple pre-provisioned storage resources (S3/NAS/NFS/GCP buckets).

Applied using a yaml file

Deployments & Sets

Deployments

Let you scale up and down with demand

Daemon sets

A single pod on every node to run background tasks

Stateful sets

Use for ordered pod creation for stateful applications

Autoscaling

Horizontal Pod Autoscaler (HPA)

The HPA monitors pods (which must have resource requests). If the HPA identifies that the target utilization is being exceeded, it modifies the replica count of the Deployment to change the number of pods.

If the cluster is maxed out and no new pods can be scheduled, the required pods are placed in 'Pending' status (not live) due to lack of resources.
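
The scaling decision can be sketched with the formula given in the Kubernetes HPA documentation: desired replicas = ceil(current replicas x current metric / target metric). The sketch below is a simplification that ignores the HPA's tolerance band and stabilization behaviour; the function name and the default minimum and maximum values are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the HPA ratio formula, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas averaging 90% CPU against a 60% target, the formula asks for 6 replicas. If the cluster cannot fit the extra pods, they stay Pending until capacity appears.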

Cluster {NODE} Autoscaler (CA)

The cluster autoscaler handles autoscaling of nodes only. It is triggered by pods in the Pending status; when it identifies them, it will create a new node so that the pods can be scheduled.

Vertical Pod Autoscaler (VPA) (currently NOT GA)

MetaData

Metadata can be assigned at both the project and instance level. Project level metadata propagates to all virtual machine instances within the project, while instance level metadata only impacts that instance.

Default Project & Instance Metadata

'Metadata-Flavor: Google' must be used in all query headers
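
A minimal sketch of a metadata query using only the Python standard library is shown below. It builds the request with the required header; the actual fetch only works from inside a Compute Engine instance, so `fetch_metadata` is defined but not called here. The helper names are hypothetical.

```python
import urllib.request

# Base URL of the Compute Engine metadata server (v1 API).
METADATA_ROOT = "http://metadata.google.internal/computeMetadata/v1/"


def build_metadata_request(path):
    """Build a request for a metadata path with the mandatory header set."""
    return urllib.request.Request(
        METADATA_ROOT + path,
        headers={"Metadata-Flavor": "Google"},
    )


def fetch_metadata(path, timeout=2):
    """Fetch a metadata value; only reachable from inside a VM instance."""
    with urllib.request.urlopen(build_metadata_request(path), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# Example: instance-level metadata lives under instance/, project-level under project/.
request = build_metadata_request("instance/hostname")
```

On a VM, `fetch_metadata("project/project-id")` would return project-level metadata and `fetch_metadata("instance/hostname")` instance-level metadata.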

Security & Identity

Security Model

Infrastructure Security

IAM

Role Types

Primitive roles

There are three roles that existed prior to the introduction of Cloud IAM: Owner, Editor, and Viewer. These roles are concentric; that is, the Owner role includes the permissions in the Editor role, and the Editor role includes the permissions in the Viewer role.

Owner

Editor

Viewer

Predefined roles

In addition to the primitive roles, Cloud IAM provides additional predefined roles that give granular access to specific Google Cloud Platform resources and prevent unwanted access to other resources.

Custom Roles

In addition to the predefined roles, Cloud IAM also provides the ability to create customized Cloud IAM roles. You can create a custom Cloud IAM role with one or more permissions and then grant that custom role to users who are part of your organization

The 'ETAG' property is used to verify if the custom role has changed since the last request. When you make a request to Cloud IAM with an etag value, Cloud IAM compares the etag value in the request with the existing etag value associated with the custom role. It writes the change only if the etag values match.
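
The etag check is a form of optimistic concurrency control. The sketch below simulates it with an in-memory store so the behaviour can be seen without calling Cloud IAM; the `RoleStore` class, the way the etag is derived (a hash of the role body), and the role name are all assumptions made for illustration.

```python
import hashlib
import json


class StaleEtagError(Exception):
    """Raised when an update carries an etag that no longer matches the stored role."""


class RoleStore:
    """In-memory stand-in for a service that guards writes with etags."""

    def __init__(self):
        self._roles = {}

    @staticmethod
    def _etag(body):
        # Any value that changes whenever the role changes would do; a hash is simple.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()[:12]

    def create(self, name, permissions):
        body = {"name": name, "includedPermissions": sorted(permissions)}
        self._roles[name] = body
        return {**body, "etag": self._etag(body)}

    def get(self, name):
        body = self._roles[name]
        return {**body, "etag": self._etag(body)}

    def update(self, name, permissions, etag):
        # Write only if the caller saw the current version of the role.
        if etag != self._etag(self._roles[name]):
            raise StaleEtagError(name)
        return self.create(name, permissions)


store = RoleStore()
store.create("customViewer", ["compute.instances.get"])

# Two administrators read the same version of the role.
first = store.get("customViewer")
second = store.get("customViewer")

# The first write succeeds and changes the stored etag.
store.update("customViewer", ["compute.instances.get", "compute.instances.list"], first["etag"])

# The second write still carries the old etag, so it is rejected.
try:
    store.update("customViewer", ["compute.instances.get"], second["etag"])
    stale_rejected = False
except StaleEtagError:
    stale_rejected = True
```

The rejected caller would normally re-read the role, reapply the change on top of the new version, and retry with the fresh etag.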

Service accounts

In addition to being an identity, a service account is a resource which has IAM policies attached to it. These policies determine who can use the service account.

OS Login

Use OS Login to manage SSH access to your instances using IAM without having to create and manage individual SSH keys

Benefits

Automatic Linux account lifecycle management - You can directly tie a Linux user account to a user's Google identity so that the same Linux account information is used across all instances in the same project or organization.

Fine grained authorization using Google Cloud IAM - Project and instance-level administrators can use IAM to grant SSH access to a user's Google identity without granting a broader set of privileges. For example, you can grant a user permissions to log into the system, but not the ability to run commands such as sudo. Google checks these permissions to determine whether a user can log into a VM instance.

Automatic permission updates - With OS Login, permissions are updated automatically when an administrator changes Cloud IAM permissions. For example, if you remove IAM permissions from a Google identity, then access to VM instances is revoked. Google checks permissions for every login attempt to prevent unwanted access.

Ability to import existing Linux accounts - Administrators can choose to optionally synchronize Linux account information from Active Directory (AD) and Lightweight Directory Access Protocol (LDAP) that are set up on-premises. For example, you can ensure that users have the same user ID (UID) in both your Cloud and on-premises environments.

Link

**IAM Best Practices

Authentication Overview

Firewalls

**Specifications

Firewall rules only support IPv4 traffic. When specifying a source for an ingress rule or a destination for an egress rule by address, you can only use an IPv4 address or IPv4 block in CIDR notation.

Each firewall rule's action is either allow or deny. The rule applies to traffic as long as it is enforced. You can disable a rule for troubleshooting purposes, for example.

Each firewall rule applies to incoming (ingress) or outgoing (egress) traffic, not both.

When you create a firewall rule, you must select a VPC network. While the rule is enforced at the instance level, its configuration is associated with a VPC network. This means you cannot share firewall rules among VPC networks, including networks connected by VPC Network Peering or by using Cloud VPN tunnels.

GCP firewall rules are stateful.

Firewall rules cannot allow traffic in one direction while denying the associated return traffic.

Google Cloud firewall rule actions

Always Blocked Traffic

GRE traffic:

All sources, all destinations, including among instances using internal IP addresses

Protocols other than TCP, UDP, ICMP, and IPIP: traffic between:

• instances and the Internet

• instances if they are addressed with external IP addresses

• instances if a load balancer with an external IP address is involved

Egress traffic on TCP port 25 (SMTP): traffic from:

• instances to the Internet

• instances to other instances addressed by external IP address

Always allowed Traffic

A local metadata server alongside each instance at 169.254.169.254. This server is essential to the operation of the instance, so the instance can access it regardless of any firewall rules you configure. The metadata server provides the following basic services to the instance:

DHCP

DNS resolution, following the name resolution order for the VPC network. Unless you have configured an alternative name server, DNS resolution includes looking up Compute Engine Internal DNS, querying Cloud DNS zones, and public DNS names

Instance metadata

NTP : Network Time Protocol

Implied Rules

Implied allow egress

An egress rule whose action is allow, destination is 0.0.0.0/0, and priority is the lowest possible (65535) lets any instance send traffic to any destination, except for traffic blocked by GCP. Outbound access may be restricted by a higher priority firewall rule. Internet access is allowed if no other firewall rules deny outbound traffic and if the instance has an external IP address or uses a NAT instance.

Implied deny ingress

An ingress rule whose action is deny, source is 0.0.0.0/0, and priority is the lowest possible (65535) protects all instances by blocking incoming traffic to them. Incoming access may be allowed by a higher priority rule. Note that the default network includes some additional rules that override this one, allowing certain types of incoming traffic.

Implied rules cannot be removed
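
How the implied rules interact with user-defined rules can be sketched as a small evaluator: collect the rules that match the direction and address, then pick the one with the lowest priority number, with deny winning a tie. This is a simplified model that ignores protocols, ports, targets, and connection state; the `Rule` class, the rule names, and the example addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    direction: str   # "INGRESS" or "EGRESS"
    action: str      # "allow" or "deny"
    cidr: str        # source range for ingress, destination range for egress
    priority: int = 1000


# The two implied rules described above, at the lowest possible priority.
IMPLIED = [
    Rule("implied-allow-egress", "EGRESS", "allow", "0.0.0.0/0", 65535),
    Rule("implied-deny-ingress", "INGRESS", "deny", "0.0.0.0/0", 65535),
]


def evaluate(rules, direction, remote_ip):
    """Return the rule that decides traffic to or from `remote_ip`."""
    ip = ipaddress.ip_address(remote_ip)
    candidates = [
        r for r in list(rules) + IMPLIED
        if r.direction == direction and ip in ipaddress.ip_network(r.cidr)
    ]
    # Lower number means higher priority; at equal priority a deny rule wins.
    return min(candidates, key=lambda r: (r.priority, r.action != "deny"))


rules = [
    Rule("allow-office-ssh", "INGRESS", "allow", "203.0.113.0/24", 1000),
    Rule("deny-egress-to-10", "EGRESS", "deny", "10.0.0.0/8", 900),
]
```

Ingress from 203.0.113.7 is decided by `allow-office-ssh`, while ingress from any other address falls through to the implied deny. Egress is allowed by default unless a higher priority rule such as `deny-egress-to-10` matches.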

Ingress rule example

Egress rule example

Firewall tags

Network tags are text attributes you can add to Compute Engine virtual machine (VM) instances. Tags allow you to make firewall rules and routes applicable to specific VM instances.

You can only add network tags to VM instances or instance templates. You cannot tag other GCP resources. You can assign network tags to new instances at creation time, or you can edit the set of assigned tags at any time later. Network tags can be edited without stopping an instance.

Targets for firewall rules

Every firewall rule in GCP must have a target which defines the instances to which it applies. The default target is all instances in the network, but you can specify instances as targets using either target tags or target service accounts.

Ingress rules apply to traffic entering your VPC network. For ingress rules, the targets are destination VMs in GCP.

Egress rules apply to traffic leaving your VPC network. For egress rules, the targets are source VMs in GCP.

Cloud Identity-Aware Proxy

Encryption

Cloud Identity

KMS

You can create up to 10 service account keys per service account to facilitate key rotation.

Security Scanner

Command Center

Cloud Storage

Storage & Databases

see /resources/freeresources/online articles

Cloud SQL

Cloud Spanner

Cloud Datastore

Cloud Bigtable

Cloud Firestore

IoT, Big Data, and Analytics

Cloud IoT Core

Cloud BigQuery

Cloud Dataproc

Cloud Datalab

Cloud Pub/Sub

Cloud Dataprep

Cloud Dataflow

Cloud Datastudio

Machine Learning & AI

ML Engine

Cloud TPUs

Vision API

Video Intelligence API

Speech API

Natural Language API

Text to Speech API

Translation API

Prediction API

Dialogflow

Tensorflow

Cloud AI

AutoML

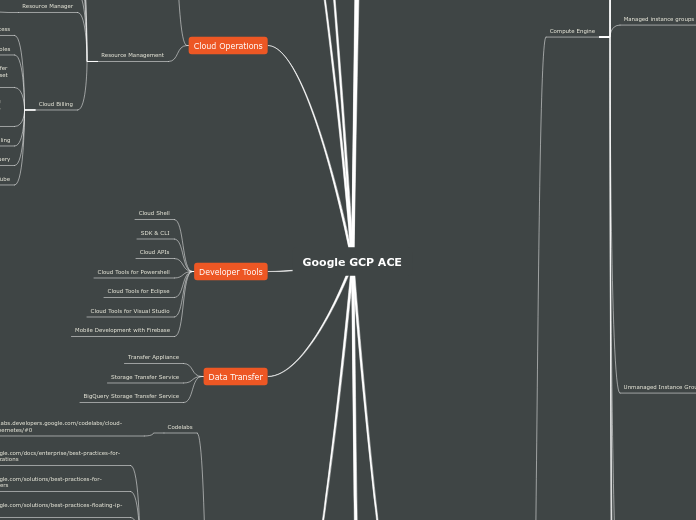

Cloud Operations

Monitoring

Resource Management

Deployment Manager

Cloud Launcher

Container Builder

Container Registry

Resource Manager

Creating&Managing Labels

Cloud Billing

Roles

*Note: Other legacy roles (such as Project Owner) also confer some billing permissions. The Project Owner role is a superset of Project Billing Manager.

*Note: Although you link billing accounts to projects, billing accounts are not parents of projects in a Cloud IAM sense, and therefore projects don't inherit permissions from the billing account they are linked to.

Custom roles for billing

Billing and BigQuery

YouTube

Developer Tools

Cloud Shell

SDK & CLI

Cloud APIs

Cloud Tools for PowerShell

Cloud Tools for Eclipse

Cloud Tools for Visual Studio

Mobile Development with Firebase

Data Transfer

Transfer Appliance

Storage Transfer Service

BigQuery Storage Transfer Service

Resources

Codelabs

Best Practices

Training Resources

Coursera

A Cloud Guru

Kasey Shah

Free Resources

Cloud-On-Air

Cloud-on-air (Google webinars)

Google 'One minute Videos'

CIDR Notation

Drawing tools

Online Articles

DecisionTrees(medium.com)

Compute/Storage/Network/EncryptionKeys/Authentication

TransferDataSets/Tags&Labels/FloatingIP/ScopingGKE/

Storage Class/ML or SQL/Load Balancer/Identity Products

Kubernetes (various by Jon.Campos)

DevOps (ReactiveOps)